PointNSP: Autoregressive 3D Point Cloud Generation with Next-Scale Level-of-Detail Prediction

Ziqiao Meng¹, Qichao Wang², Zhiyang Dou³, Zixing Song⁴, Zhipeng Zhou², Irwin King⁵, Peilin Zhao⁶

¹National University of Singapore

²Nanyang Technological University

³University of Hong Kong

⁴University of Cambridge

⁵The Chinese University of Hong Kong

⁶Shanghai Jiao Tong University

[Paper]

[Code]

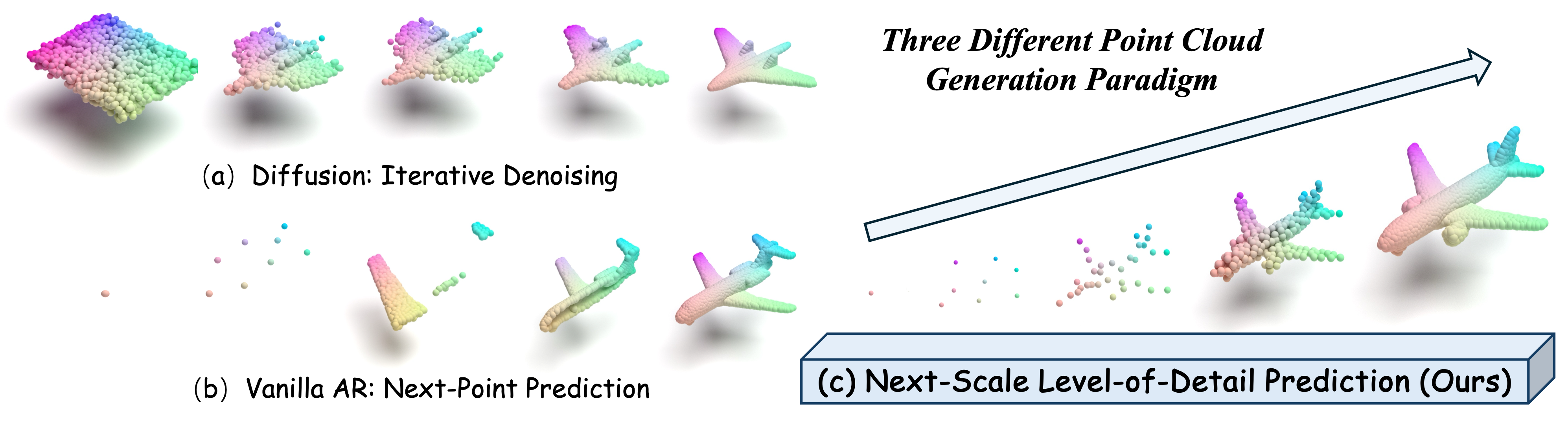

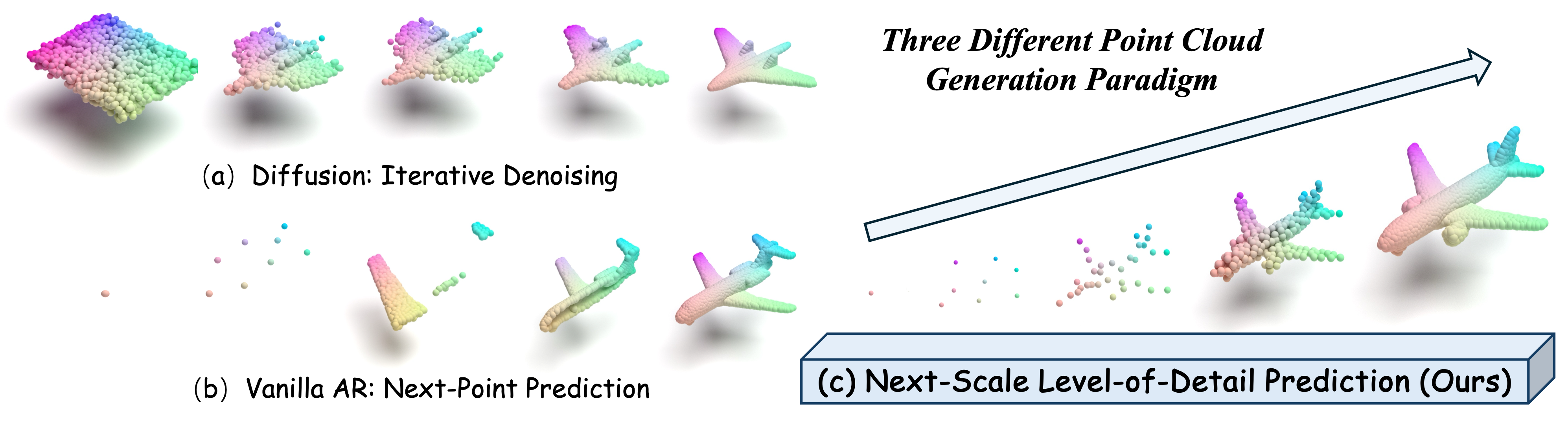

Three types of point cloud generative models: (a) diffusion-based methods that iteratively denoise shapes starting from Gaussian noise; (b) vanilla autoregressive (AR) methods that predict the next point by flattening the 3D shape into a sequence; and (c) our proposed PointNSP, which predicts next-scale level-of-detail in a coarse-to-fine manner.

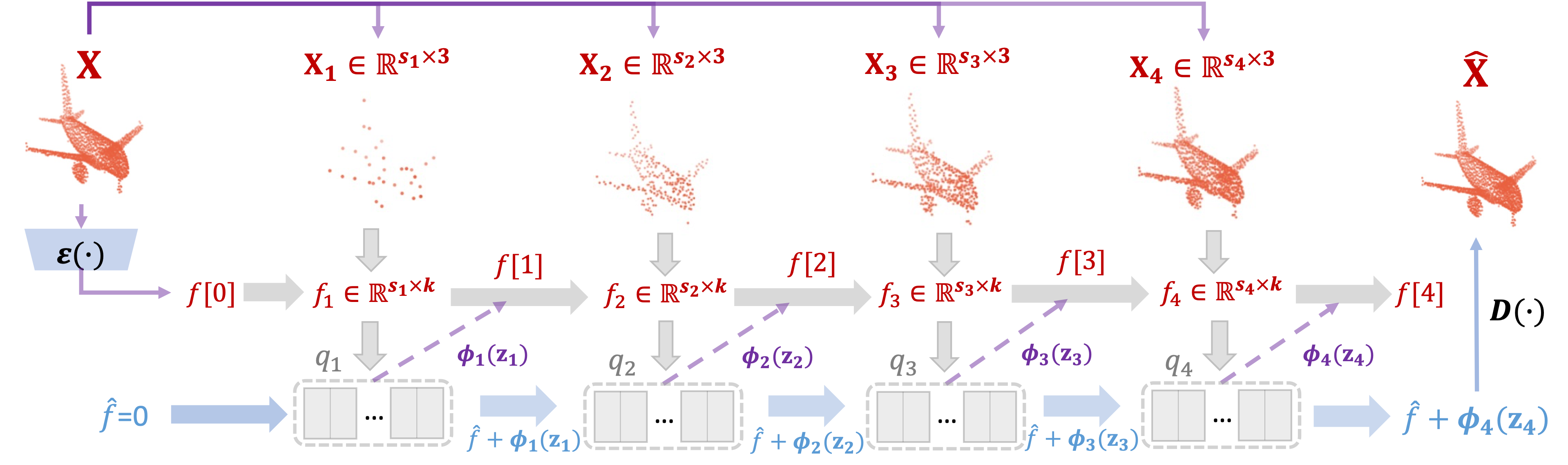

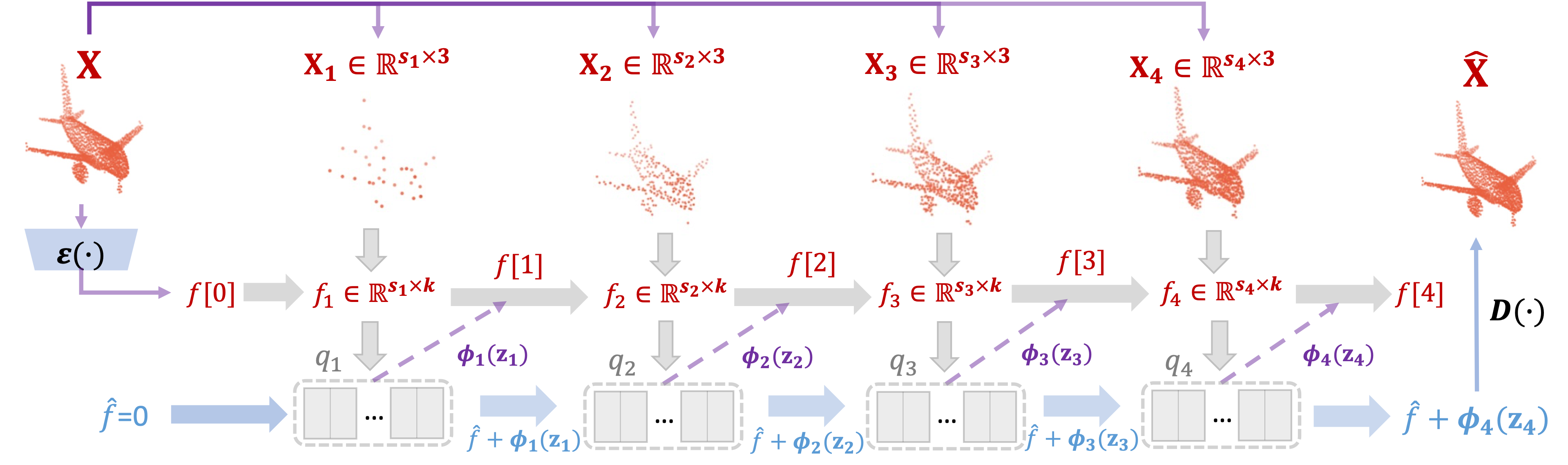

Overview of PointNSP: training a multi-scale VQ-VAE for 3D point cloud reconstruction across scales \( s_{1} \) to \( s_{4} \), resulting in a multi-scale token sequence \( Q = (q_{1}, \dots, q_{4}) \).
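The token sequence \( Q = (q_{1}, \dots, q_{4}) \) can be pictured with a minimal sketch of the nearest-codebook lookup that any VQ-VAE performs, applied once per scale. This is an illustration under assumptions, not the authors' implementation: the function name `quantize`, the codebook size, the feature dimension, the per-scale token counts, and the random features standing in for encoder outputs are all hypothetical.

```python
import numpy as np

def quantize(features, codebook):
    """Map each feature vector to the index of its nearest codebook entry."""
    # Pairwise squared Euclidean distances, shape (num_features, num_codes).
    diff = features[:, None, :] - codebook[None, :, :]
    sq_dist = (diff ** 2).sum(axis=-1)
    return sq_dist.argmin(axis=1)

rng = np.random.default_rng(0)
codebook = rng.normal(size=(16, 8))   # hypothetical: 16 codes of dimension 8
scale_sizes = [1, 4, 16, 64]          # hypothetical token counts for s_1..s_4

# Random features stand in for the encoder output at each scale.
Q = [quantize(rng.normal(size=(n, 8)), codebook) for n in scale_sizes]
```

Each entry of `Q` is an array of integer token indices for one scale, so the list as a whole plays the role of \( (q_{1}, \dots, q_{4}) \).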

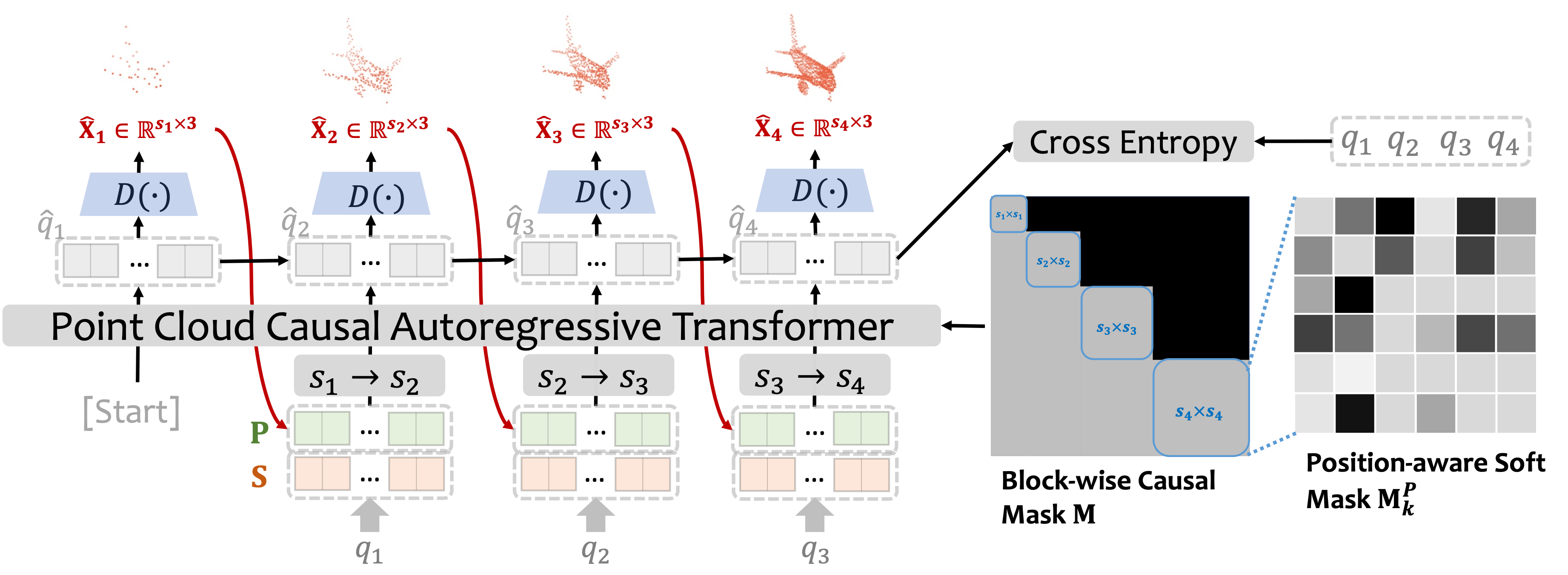

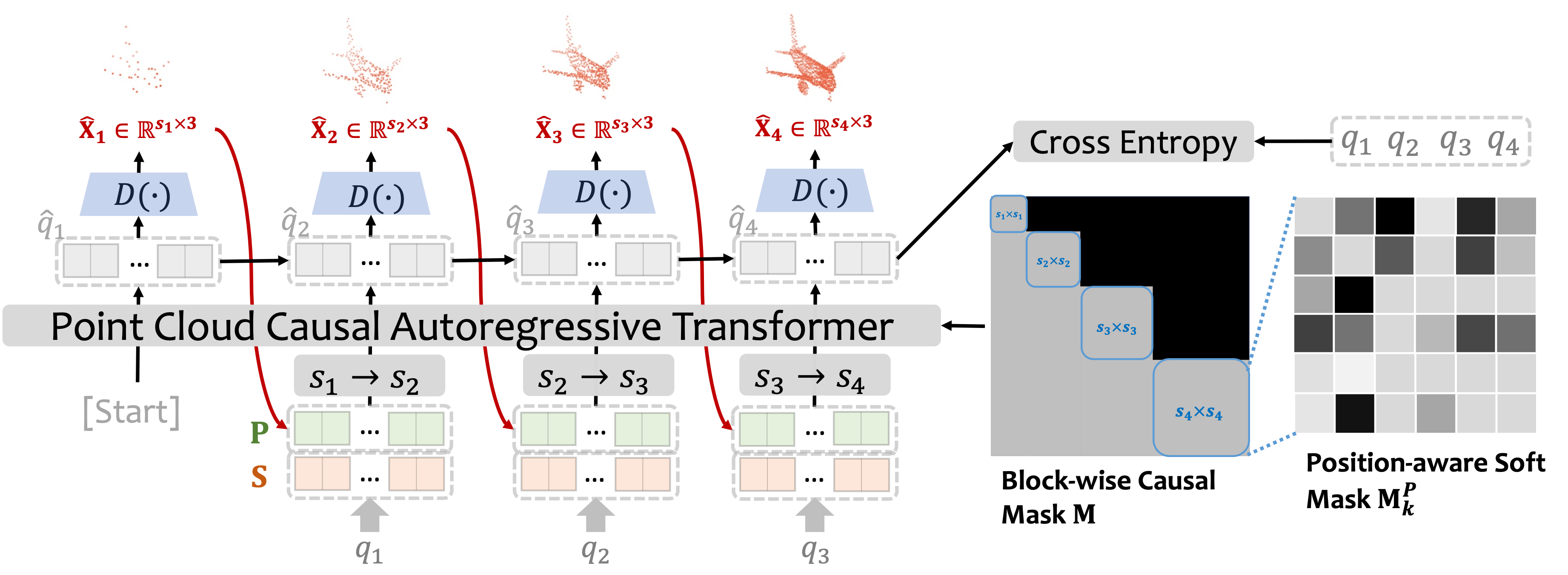

Illustration of training a multi-scale 3D point cloud causal transformer with intermediate decoding, upsampling, position-aware soft masks, and block-wise causal masks.
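A block-wise causal mask can be sketched as follows. The sketch assumes the usual next-scale convention, in which a token may attend to every token at its own scale and at all coarser scales; the page does not spell out the exact masking rule, and the position-aware soft mask is not modeled here. The function name and the scale lengths are hypothetical.

```python
import numpy as np

def blockwise_causal_mask(scale_lengths):
    """Boolean (T, T) mask; entry [i, j] is True if query i may attend to key j."""
    # Scale index of every token in the flattened multi-scale sequence.
    scale_id = np.repeat(np.arange(len(scale_lengths)), scale_lengths)
    # A token sees keys whose scale is the same as or coarser than its own.
    return scale_id[:, None] >= scale_id[None, :]

mask = blockwise_causal_mask([1, 2, 4])  # hypothetical: three scales, 7 tokens
```

Unlike a token-level causal mask, tokens inside the same scale block see each other, so all tokens of one scale can be predicted in parallel.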

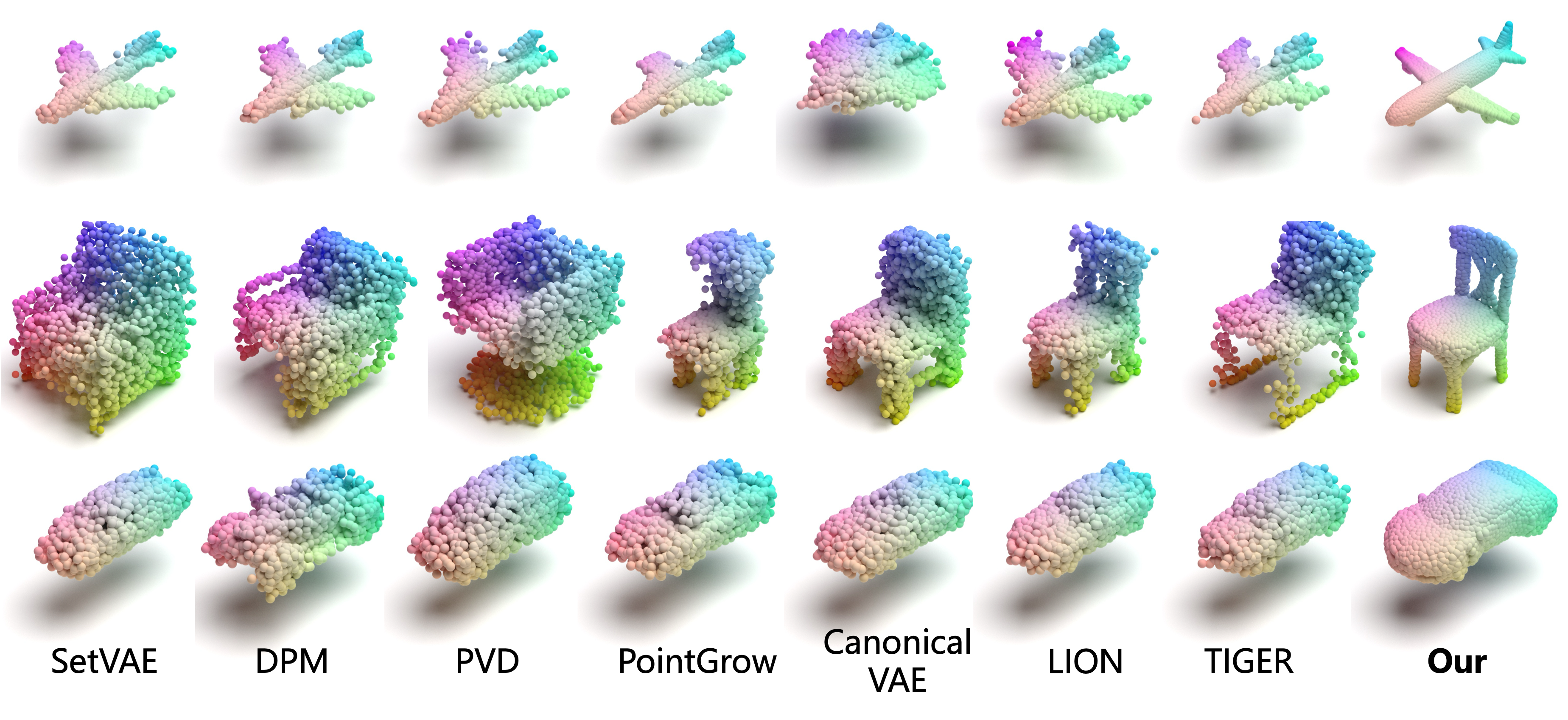

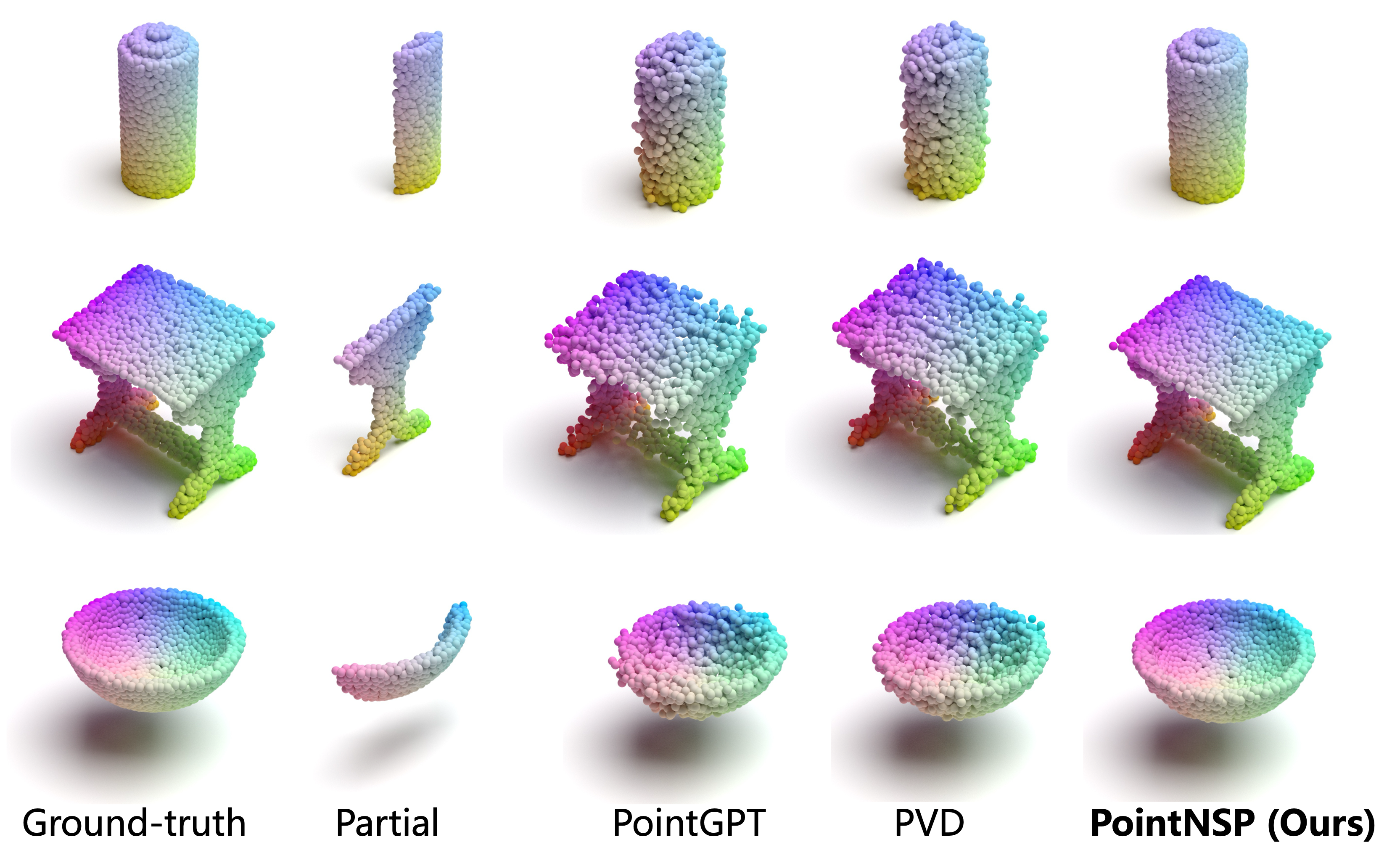

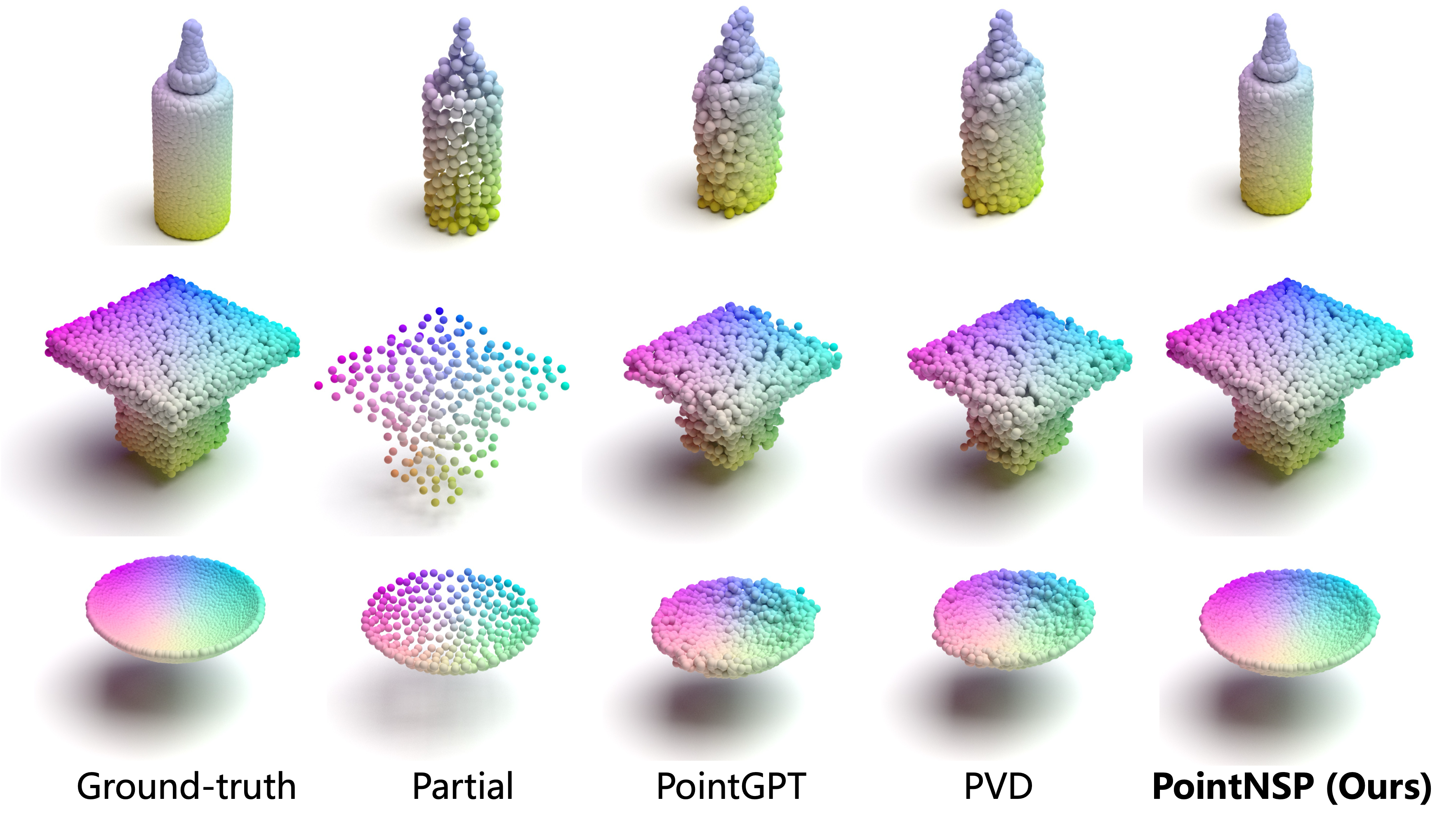

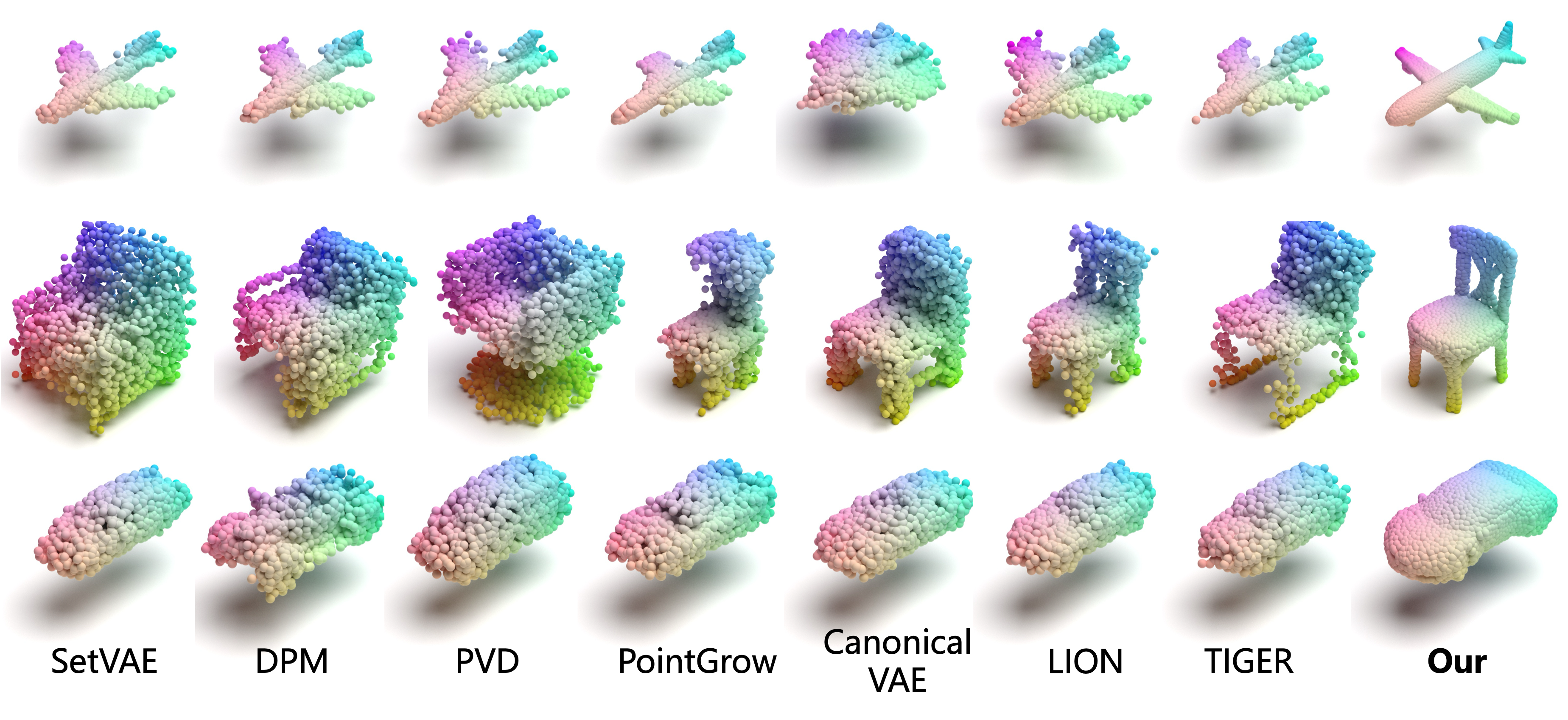

Our generation results (right) compared to baseline models (left). PointNSP generates high-quality and diverse 3D point clouds.

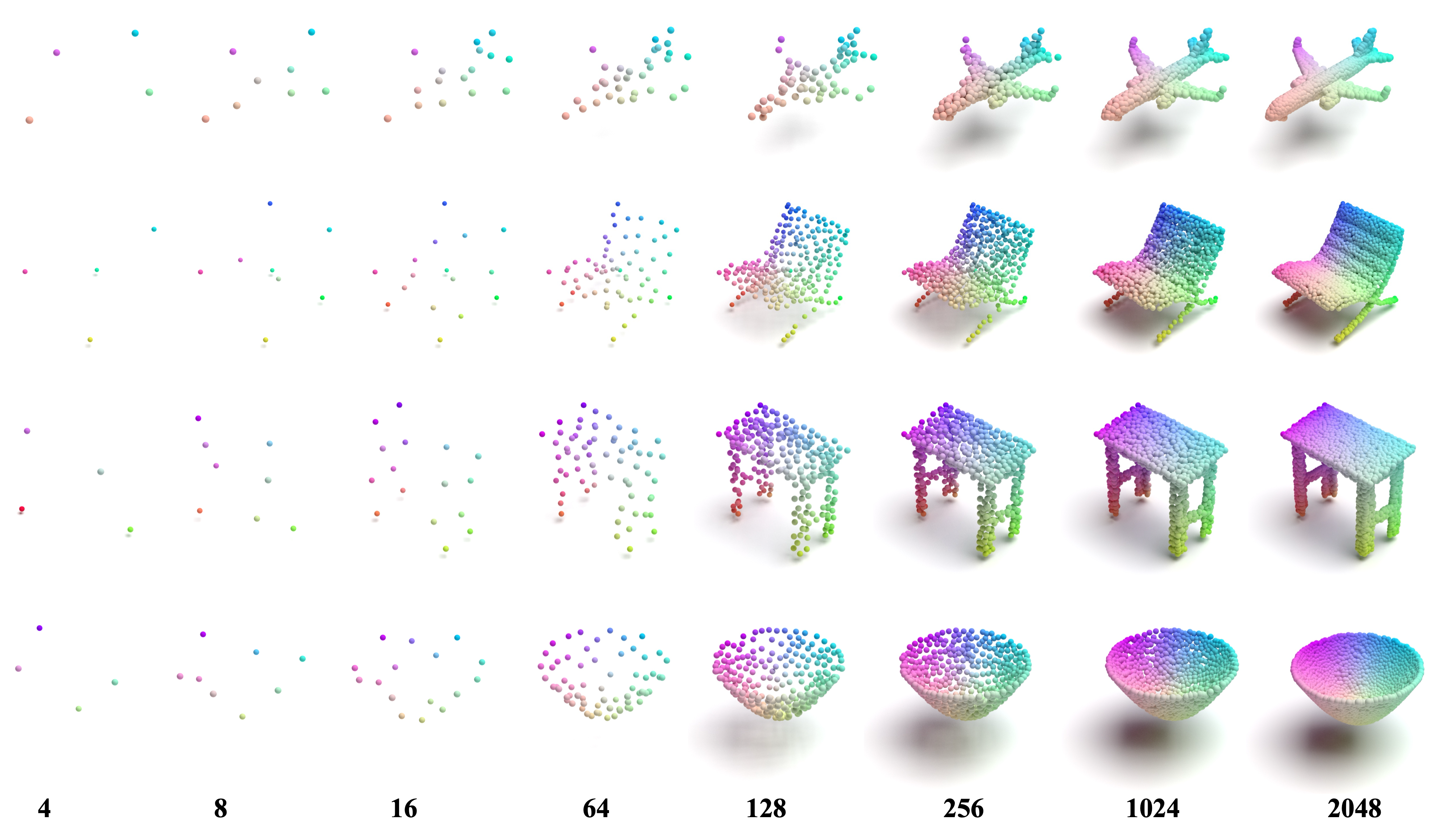

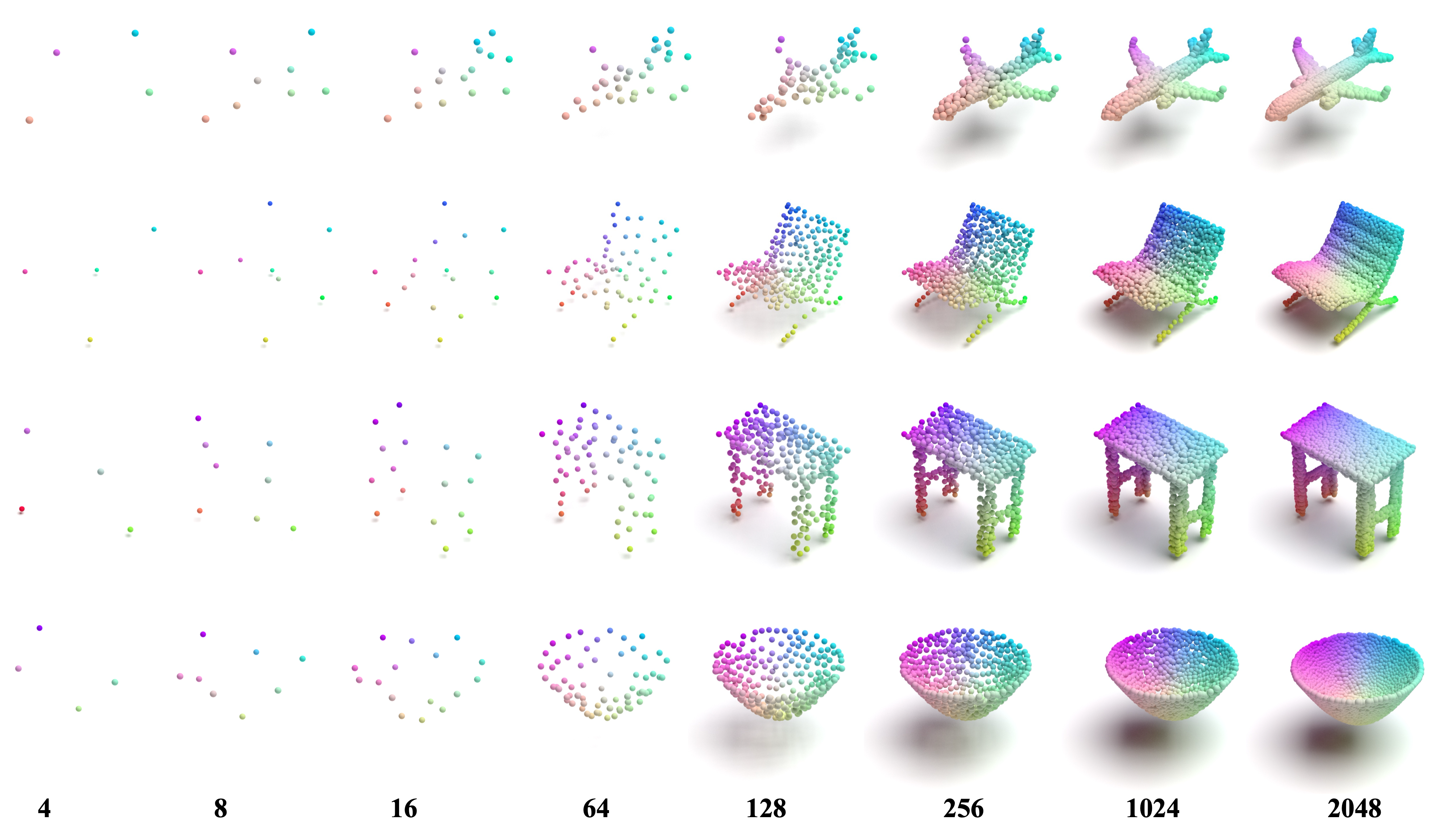

Visualization of multi-scale point clouds during the PointNSP generation process as the scale K increases.
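One common way to obtain nested coarse-to-fine levels of detail from a point cloud is farthest point sampling, where the first k points of the sampling order form the level with k points. The sketch below assumes this construction purely for illustration; the page does not state how PointNSP builds its scales, and the function name, point count, and level sizes are hypothetical.

```python
import numpy as np

def farthest_point_order(points, start=0):
    """Return point indices in farthest-point-sampling order."""
    order = [start]
    # Distance from every point to the closest already selected point.
    dist = np.linalg.norm(points - points[start], axis=1)
    for _ in range(len(points) - 1):
        nxt = int(dist.argmax())
        order.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(points - points[nxt], axis=1))
    return np.array(order)

rng = np.random.default_rng(0)
points = rng.random((32, 3))          # hypothetical point cloud
order = farthest_point_order(points)

# Prefixes of the order give nested levels of detail, coarse to fine.
levels = [points[order[:k]] for k in (4, 8, 16, 32)]
```

Because each level is a prefix of the same ordering, every coarser level is contained in all finer ones, which matches the coarse-to-fine progression shown in the figure.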

Citation

To cite the paper, please use the BibTeX entry below:

@misc{meng2025pointnspautoregressive3dpoint,
  title={PointNSP: Autoregressive 3D Point Cloud Generation with Next-Scale Level-of-Detail Prediction},
  author={Ziqiao Meng and Qichao Wang and Zhiyang Dou and Zixing Song and Zhipeng Zhou and Irwin King and Peilin Zhao},
  year={2025},
  eprint={2510.05613},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2510.05613},
}